The Y2038 Glitch

09 Jun 2017

I wrote this article a little over a year ago for a college magazine. This topic has been done to death in a Q/A format, so if you're looking for something new about Y2038, you probably won't find it here.

Time has always been a finicky thing to deal with. Our perception of time without any stimulus is limited to less than an hour. We would be severely crippled without our abundance of electronic devices synchronized with atomic clocks over the internet. Unfortunately, these devices we rely on so heavily are heading towards a time-based computer disaster on the same scale as the Y2K bug at the turn of this millennium, known as the Y2038 problem.

How do computers measure time and what is the Y2038 bug?

All UNIX-derived systems (including your iPhone or Android smartphone) measure time by counting the number of seconds that have elapsed since 00:00 UTC, 1 January 1970. This moment is known as the UNIX epoch. While this method of measuring time has served us well for decades, it has a severe limitation: time_t, the integer type UNIX uses to store the number of seconds since the epoch, is only a signed 32-bit value on older 32-bit systems. This means time_t can only count up to 2^31 - 1 seconds (2,147,483,647) before the counter overflows.
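As a rough sketch of the idea (in Python rather than the C that UNIX itself is written in, and with a helper name, `seconds_since_epoch`, that I made up for illustration), a UNIX timestamp is just the number of seconds between the epoch and some moment:

```python
from datetime import datetime, timezone

# The UNIX epoch: 00:00:00 UTC, 1 January 1970
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Largest value a signed 32-bit integer can hold
INT32_MAX = 2**31 - 1


def seconds_since_epoch(moment):
    """Whole seconds between the epoch and a timezone-aware datetime."""
    return int((moment - EPOCH).total_seconds())


posted = datetime(2017, 6, 9, tzinfo=timezone.utc)  # the day this post went up
stamp = seconds_since_epoch(posted)
print(stamp)              # seconds counted so far
print(INT32_MAX - stamp)  # seconds left before a 32-bit counter runs out
```

Real systems read this counter from the kernel instead of computing it from a calendar date, but the number they get back means the same thing.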

Note: 32-bit systems can hold a 64-bit int by splitting it into two 32-bit words. However, the default int size on 32-bit systems is only 32 bits, and a 64-bit int has to be requested explicitly in code.

So how long until this overflow?

Exactly 2^31 - 1 seconds after 00:00 UTC, 1 January 1970, which is 03:14:07 UTC, 19 January 2038.
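If you'd like to check that date yourself, here is a small sketch that adds the largest signed 32-bit value, counted in seconds (the unit time_t uses), to the epoch:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# The last moment a signed 32-bit second counter can represent
last_moment = EPOCH + timedelta(seconds=2**31 - 1)
print(last_moment.isoformat())  # 2038-01-19T03:14:07+00:00
```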

So what will happen?

The overflow in the integer value will cause time_t to wrap around to -(2^31) seconds. That corresponds to 20:45:52 UTC, 13 December 1901. This is actually a much more serious situation than just your computer showing a funny date. It has potentially disastrous consequences for systems with 32-bit CPUs, as BIOS software, file systems, databases, network security certificates and a wide variety of embedded hardware could stop working correctly. Where critical machinery such as an old electrical power station is controlled by a 32-bit computer, the result could be catastrophic if nothing is done about it.
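The wraparound can be imitated in a few lines. This is only a simulation: `ctypes.c_int32` keeps the low 32 bits of a number, which mimics what typical hardware does, while the C standard itself leaves signed overflow undefined. The helper name `tick_32bit` is mine.

```python
import ctypes
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1


def tick_32bit(counter):
    """Advance the counter by one second, truncated to a signed 32-bit value."""
    return ctypes.c_int32(counter + 1).value


wrapped = tick_32bit(INT32_MAX)
print(wrapped)                             # -2147483648
print(EPOCH + timedelta(seconds=wrapped))  # 1901-12-13 20:45:52+00:00
```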

Oh no! Does that mean my laptop and smartphone will stop working as well?

Depends. The 32-bit time_t has been replaced on modern systems by a 64-bit time_t, which will tide us over for roughly the next 292 billion years. 64-bit CPUs are the standard nowadays, and most computers running a 64-bit OS on a 64-bit CPU will not be affected by the Y2038 bug, as they use the 64-bit time_t. However, smartphones have only recently shifted to 64-bit processors, and 32-bit smartphones and computers will be affected if their software still stores time in a 32-bit int. That is, of course, only if you are still using them about 21 years from today :P
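A quick back-of-the-envelope check of how far each counter reaches, assuming an average year of 365.25 days:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

INT32_MAX = 2**31 - 1
INT64_MAX = 2**63 - 1

years_32 = INT32_MAX / SECONDS_PER_YEAR
years_64 = INT64_MAX / SECONDS_PER_YEAR

print(f"32-bit time_t: about {years_32:.0f} years after 1970")
print(f"64-bit time_t: about {years_64 / 1e9:.0f} billion years after 1970")
```

The first figure comes out at roughly 68 years and the second at roughly 292 billion, which is many times the current age of the universe.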

But how did this even happen? Why couldn't we just use a 64-bit time_t variable in the first place? It's only 4 bytes bigger!

Short answer: Legacy.

Long answer: The UNIX kernel was created at AT&T's Bell Labs in the early 1970s. There were many competent programmers working on the project, such as Dennis Ritchie of C fame. Initially, Bell engineers used a different method for counting time, but they found that the counter would only last about 2.5 years. As UNIX was planned to be a long project, they changed the time counter to a 4-byte integer counting whole seconds. This, of course, was also limited to a finite span of time. However, in the 1970s the consensus was that computers weren't going to stick around for that long, and a 60-odd year window was "good enough".

Couldn't they have just used a 64-bit int anyway?

Not really. Computer resources were scarce in the 1970s, and 8 bytes of memory to store time_t was more than engineers were willing to give.

Wait a second! Why doesn't time_t use the unsigned int? Surely UNIX wasn't programmed expecting us to go back in time!

The time_t variable uses a signed int to account for dates before the UNIX epoch. Dates before 1 January 1970 are represented as a negative number of seconds, which is why an overflow would take the computer's date back to 1901.
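To make the trade-off concrete, here is a small sketch comparing the two choices. The unsigned case is hypothetical, shown only to illustrate what the alternative would have bought:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Signed: one second before the epoch is simply -1
new_years_eve = datetime(1969, 12, 31, 23, 59, 59, tzinfo=timezone.utc)
before_epoch = int((new_years_eve - EPOCH).total_seconds())
print(before_epoch)  # -1

# Hypothetical unsigned 32-bit counter: no dates before 1970,
# but the upper limit moves out to 2^32 - 1 seconds
unsigned_limit = EPOCH + timedelta(seconds=2**32 - 1)
print(unsigned_limit)  # 2106-02-07 06:28:15+00:00
```

So an unsigned counter would have pushed the problem out to 2106 at the cost of not being able to represent anything earlier than 1970.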

But this is 2017! Can't we just upgrade the time_t variable to 64 bits?

Yes we can. In most new software and 64-bit operating systems, time_t has already been widened to 8 bytes. The problem is that legacy software built with older compilers may not simply recompile cleanly against an 8-byte time_t, and any file formats or network protocols that store time in 4 bytes stay that way until someone changes them. The problem doesn't stop there. Many embedded systems and microcontrollers (including the popular ATmega-based Arduino boards) use 8-bit or 16-bit CPUs, where 64-bit arithmetic has to be emulated in software at a cost in speed and memory. Some hardware also runs proprietary firmware which won't receive updates.
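Here is a hedged illustration of why widening the type isn't always enough. Suppose some legacy binary format (made up for this example) stores its timestamp in a 4-byte signed field. Python's `struct` module refuses to pack a post-2038 value into that field, while an 8-byte field takes it without complaint. The helper name `fits_in_32bit_field` is mine.

```python
import struct


def fits_in_32bit_field(timestamp):
    """Return True if the timestamp fits a little-endian signed 4-byte field."""
    try:
        struct.pack("<i", timestamp)
        return True
    except struct.error:
        return False


last_good = 2**31 - 1  # 03:14:07 UTC, 19 January 2038
first_bad = 2**31      # one second later

print(fits_in_32bit_field(last_good))  # True
print(fits_in_32bit_field(first_bad))  # False

# The same value fits easily once the field is widened to 8 bytes
print(len(struct.pack("<q", first_bad)))  # 8
```

Widening the field fixes new data, but every existing file and every program that reads the old layout has to be migrated too, which is where the real cost lies.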

How can we fix the Y2038 problem?

There isn't a one-size-fits-all solution for this, since the problem spans many computer architectures, hardware platforms, and pieces of software. While it might seem easier to just replace all old computers with new 64-bit capable ones, there are still many areas where old 32-bit code is prevalent. Furthermore, the cost of replacing all that hardware is simply too high.

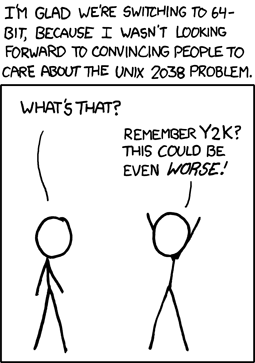

If we look at the lessons of the past, the Y2K bug was fixed largely because media hype pressured businesses into updating their software to handle 4-digit years. Although the Y2038 bug is much more critical and harder to understand than the Y2K bug, there is hope that the same pressure will bring change.

But for now, only time will tell.

Credits: xkcd